Title: Virtual Assist for Blind Person

Displayed Name:pratyush gehlot

| Concept / Overview |
|---|
| A blind person usually needs someone with them at all times. If they try to do everything alone, they either cannot manage or may run into trouble with no assistance at hand. |

Abstract

Project Introduction/Problem Statement:

A blind person usually needs someone with them at all times. If they try to do everything alone, they either cannot manage or may run into trouble with no assistance at hand.

Project Abstract/Methodology/Approach

The main aim of this device is to help a blind person live independently. The device can be worn, so it stays with the person and acts as a virtual assistant.

Working Principle:

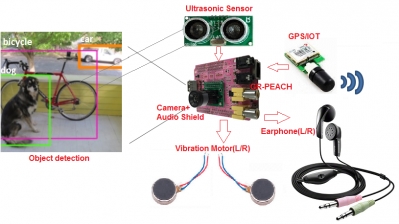

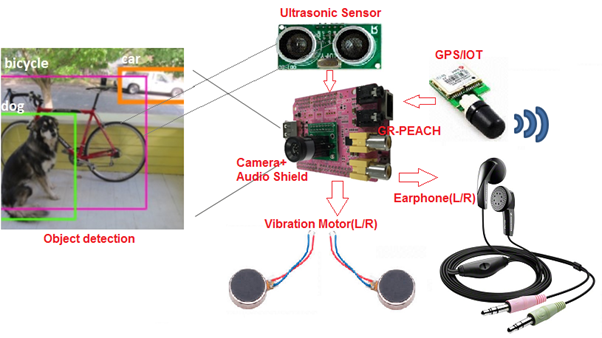

The device will capture images/videos of surrounding objects and places, for example road signs, banners, and shop names. GR-PEACH will analyse the images/videos and, based on them, give information about the surroundings to the blind person via audio.

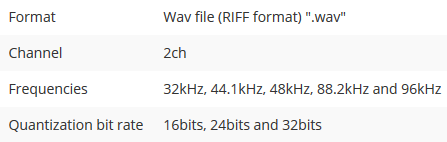

If an obstacle or wall comes close to the person, the vibration motor will start vibrating. The vibration speed indicates how far away the object is.
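The relation between distance and vibration can be pictured with the short sketch below. It is only an illustration: the function name, the 20 cm and 200 cm thresholds, and the linear ramp are assumptions, since the project does not state the actual values or mapping.

```python
def vibration_duty(distance_cm, near_cm=20.0, far_cm=200.0):
    """Return a motor duty cycle in [0.0, 1.0] for a given obstacle distance.

    near_cm and far_cm are hypothetical thresholds: at or below near_cm the
    motor runs at full strength, at or beyond far_cm it is switched off.
    """
    if distance_cm <= near_cm:
        return 1.0
    if distance_cm >= far_cm:
        return 0.0
    # Linear ramp in between: closer obstacles give a stronger vibration.
    return (far_cm - distance_cm) / (far_cm - near_cm)


if __name__ == "__main__":
    for d in (10, 50, 110, 250):
        print(d, "cm ->", round(vibration_duty(d), 2))
```

On the board, the returned value would typically be written to a PWM output that drives the motor.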

The device will be IoT-enabled so that all data can be monitored. If the person needs help or is in trouble, the device will raise an alarm or send the location to a designated person.

Project Conclusion

A small, low-cost, compact wearable gadget that acts as a virtual assistant for a blind person and helps them get by without the continuous support of another person.

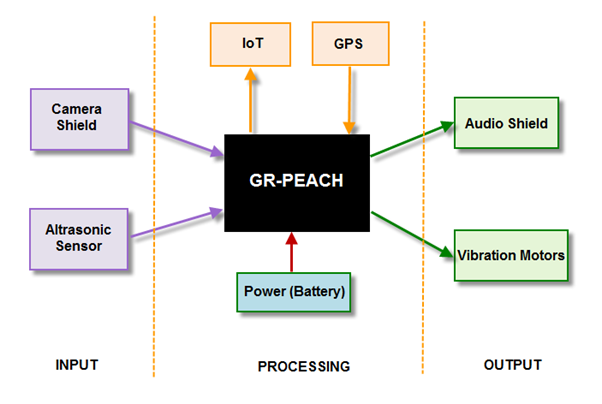

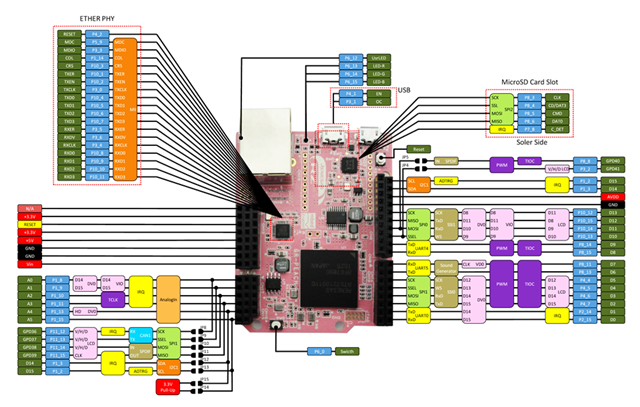

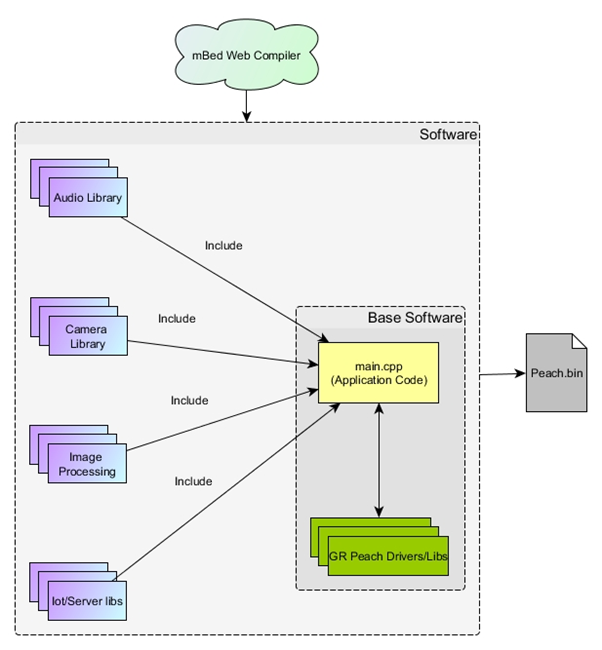

Block Diagram

This is the block diagram for the project.

The heart of this project is the powerful GR-PEACH board, which meets the project requirements: it has a built-in GPU and supports image processing, which is the most important capability for this project.

Thanks to Renesas for providing the camera and audio shield for this board. The camera captures images and videos, and the ultrasonic sensor gives the distance to an object. Together, the camera and the ultrasonic sensor provide the input data to be processed.
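As a rough sketch of how an ultrasonic reading becomes a distance: the write-up does not name the sensor, so this assumes a common echo-pulse type module that reports the round-trip time of a sound burst. The constant and function name are illustrative.

```python
# Speed of sound in air at about 20 degrees C, expressed in cm per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343


def echo_to_distance_cm(echo_us):
    """Convert a round-trip echo time in microseconds to a one-way distance in cm."""
    if echo_us < 0:
        raise ValueError("echo time cannot be negative")
    # The pulse travels to the obstacle and back, so halve the path length.
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2.0


if __name__ == "__main__":
    print(echo_to_distance_cm(1000))
```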

The vibration motors and the audio output (earphone) convey information derived from the captured input data: audio describes the surroundings, and motor vibration indicates the obstacle's distance from the person.

The GPS module tracks the person's location and can also provide useful information such as the street name or place name based on the coordinates.
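The sketch below shows how coordinates might be extracted from the GPS data. It assumes the module emits standard NMEA 0183 GGA sentences, which the write-up does not confirm; the function names are illustrative and the checksum is deliberately not verified to keep the example short.

```python
def nmea_to_degrees(value, hemisphere):
    """Convert an NMEA ddmm.mmmm (or dddmm.mmmm) field to signed decimal degrees."""
    raw = float(value)
    degrees = int(raw // 100)          # leading digits are whole degrees
    minutes = raw - degrees * 100      # the remainder is minutes
    decimal = degrees + minutes / 60.0
    return -decimal if hemisphere in ("S", "W") else decimal


def parse_gga(sentence):
    """Return (latitude, longitude) from a GGA sentence, or None if there is no fix."""
    body = sentence.strip().split("*")[0]   # drop the checksum (not verified here)
    fields = body.split(",")
    if not fields[0].endswith("GGA") or len(fields) < 7:
        return None
    if fields[6] in ("", "0"):              # fix quality 0 means no valid fix
        return None
    lat = nmea_to_degrees(fields[2], fields[3])
    lon = nmea_to_degrees(fields[4], fields[5])
    return lat, lon


if __name__ == "__main__":
    sample = "$GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,*47"
    print(parse_gga(sample))
```

Turning the coordinates into a street or place name would additionally need a map database or a reverse-geocoding service, which is outside this sketch.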

An IoT module can be connected to monitor or track the person, or to send an urgent message if the person needs help or is in trouble.
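An urgent message of this kind could be packaged as shown in the sketch below. The JSON layout, the field names, and the device identifier are all hypothetical; the write-up does not describe the message format or the transport used.

```python
import json


def build_alert(device_id, latitude, longitude, reason):
    """Build a JSON alert string; every field name here is hypothetical."""
    payload = {
        "device": device_id,
        "reason": reason,
        "location": {"lat": round(latitude, 6), "lon": round(longitude, 6)},
    }
    # sort_keys gives a stable field order, which makes logs easier to compare.
    return json.dumps(payload, sort_keys=True)


if __name__ == "__main__":
    print(build_alert("assist-01", 48.1173, 11.516667, "help_requested"))
```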

Concept and Challenges

Concept: The camera takes images or videos, which are processed on the GR-PEACH to detect objects. The real challenges here are detecting objects and choosing the algorithm to do so.

The captured data is processed further to generate an audio output signal for the blind person. The vibration motors vibrate according to the distance of the object and whether it is coming closer or moving away.
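Whether an object is coming closer or moving away can be estimated from a short window of distance readings, as in the sketch below. The function name, the labels, and the 5 cm dead band are assumptions made for illustration.

```python
def classify_motion(distances_cm, tolerance_cm=5.0):
    """Classify an obstacle as 'approaching', 'receding', or 'steady'.

    Compares the newest reading with the oldest one in the window.
    tolerance_cm is a hypothetical dead band that absorbs sensor noise.
    """
    if len(distances_cm) < 2:
        return "steady"
    change = distances_cm[-1] - distances_cm[0]
    if change < -tolerance_cm:
        return "approaching"
    if change > tolerance_cm:
        return "receding"
    return "steady"


if __name__ == "__main__":
    print(classify_motion([150, 130, 110, 90]))
    print(classify_motion([90, 92, 91, 93]))
```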

Features

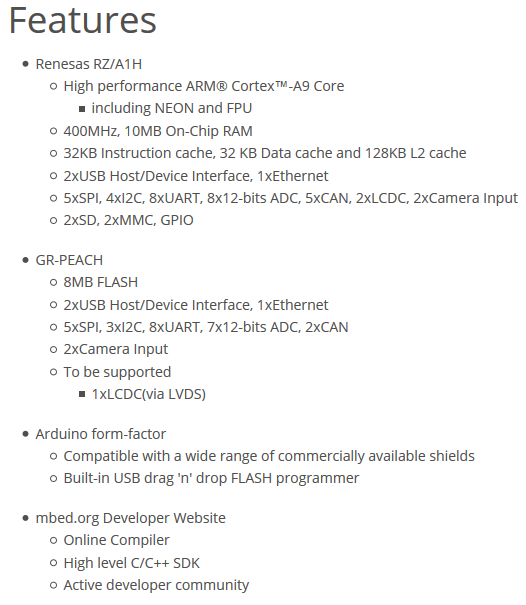

GR-PEACH Board: The basic features of the board are listed below.

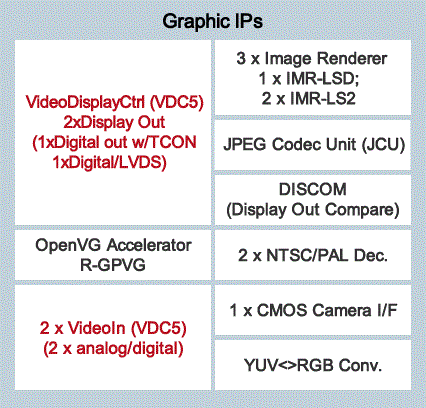

Graphics: Image-processing IPs and audio/video decoders are built into the microcontroller.

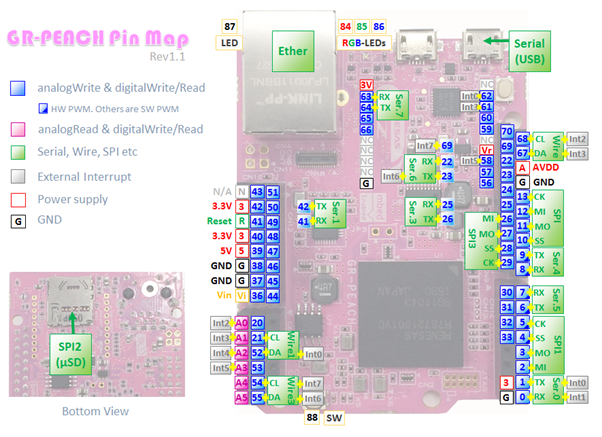

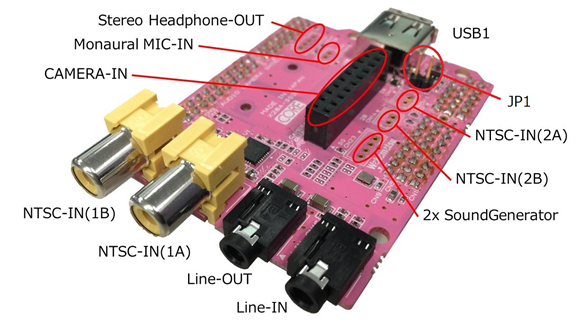

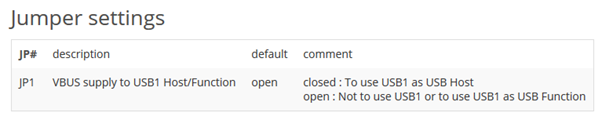

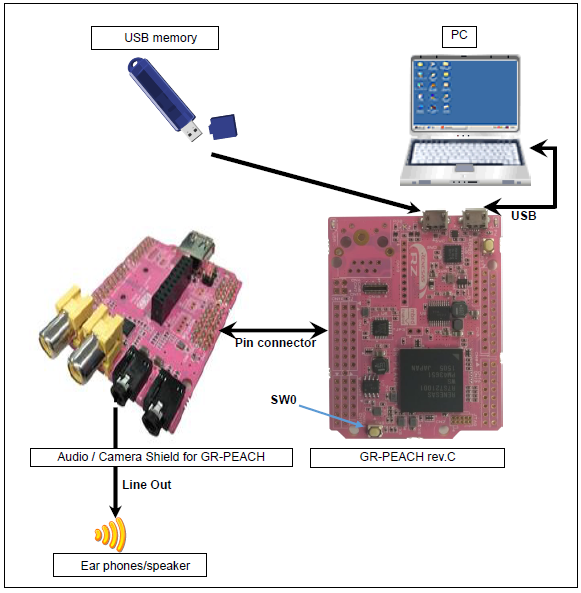

Schematic and Hardware Setup

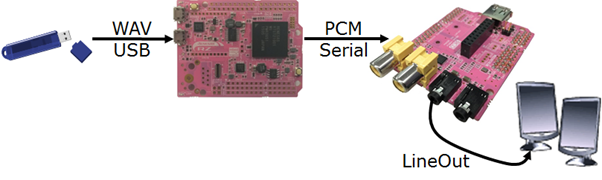

Audio Shield Connection:

Ultrasonic Sensor:

GPS: The GPS module needs a UART channel to send coordinates.

Vibration Motors: 2 x GPIO pins are needed to connect them.

Object Detection and Image Processing

This part was very challenging for us. A lot of research on object detection is already under way and some algorithms have already been implemented, so we decided to understand the existing ones and then adapt them to the project requirements.

The idea was to generate C code for GR-PEACH from a MATLAB design. Below are the MATLAB design algorithms for object detection and image processing.

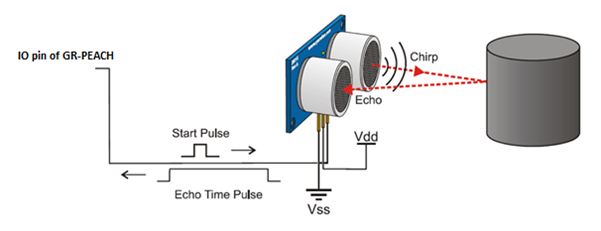

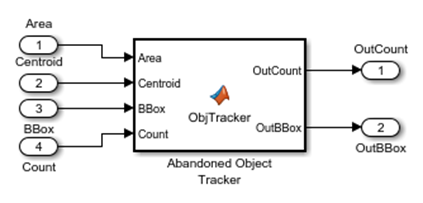

a. Abandoned Object Detection

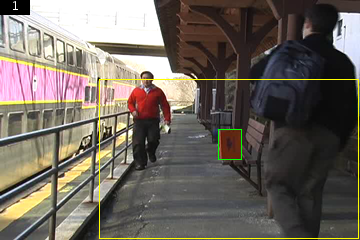

MathWorks provides a MATLAB example block showing how to track objects at a train station and determine which ones remain stationary.

This block illustrates how to use the Blob Analysis and MATLAB® Function blocks to design a custom tracking algorithm. The example implements this algorithm using the following steps:

1) Eliminate video areas that are unlikely to contain abandoned objects by extracting a region of interest (ROI).

2) Perform video segmentation using background subtraction.

3) Calculate object statistics using the Blob Analysis block.

4) Track objects based on their area and centroid statistics.

5) Visualize the results.
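Steps 2 and 3 above can be pictured with the small pure-Python sketch below. It is not the MathWorks implementation: the function names, the grey-level threshold of 30, and the 4-connected flood fill are assumptions chosen to keep the example self-contained, and frames are plain lists of grey values.

```python
from collections import deque


def foreground_mask(frame, background, threshold=30):
    """Mark pixels whose grey level differs from the background by more than threshold."""
    return [[abs(p - b) > threshold for p, b in zip(frow, brow)]
            for frow, brow in zip(frame, background)]


def find_blobs(mask):
    """Label 4-connected foreground regions and return area and centroid for each."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill collects every pixel of this blob.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            area = len(pixels)
            cy = sum(p[0] for p in pixels) / area
            cx = sum(p[1] for p in pixels) / area
            blobs.append({"area": area, "centroid": (cy, cx)})
    return blobs


if __name__ == "__main__":
    background = [[10] * 6 for _ in range(5)]
    frame = [row[:] for row in background]
    for y in (1, 2):
        for x in (1, 2):
            frame[y][x] = 200          # a 2x2 object
    frame[4][5] = 180                  # a single-pixel object
    for blob in find_blobs(foreground_mask(frame, background)):
        print(blob)
```

The area and centroid of each blob are the statistics that step 4 would compare from frame to frame to decide whether an object has stayed in place.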

Source: https://in.mathworks.com/help/vision/examples/abandoned-object-detection.html

The All Objects window marks the region of interest (ROI) with a yellow box and all detected objects with green boxes.

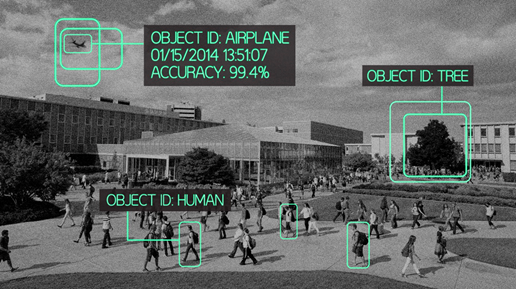

b. A smart-object recognition algorithm that doesn’t need humans

BYU engineer Dah-Jye Lee has created an algorithm that can accurately identify objects in images or video sequences — without human calibration.

http://www.kurzweilai.net/a-smart-object-recognition-algorithm-that-doesnt-need-humans

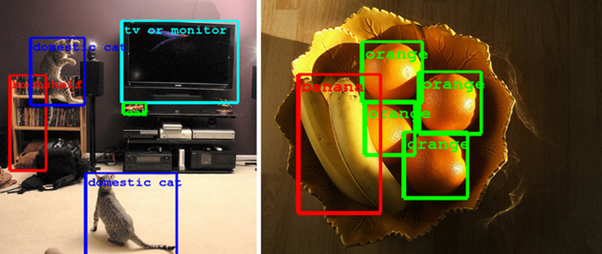

c. Improved Scene Identification and Object Detection on Egocentric Vision of Daily Activities

http://crcv.ucf.edu/projects/cviu2016/

d. Detecting and Segmenting Humans in Crowded Scenes

Software Design

The GR-PEACH board supports the Arduino and Mbed environments, so we used some readily available libraries with our board and changed only the configuration part to suit the project requirements.

We compiled the complete project in the Mbed web compiler. Below is the software architecture.

/edDir/files/GR-PEACH_Code.rar

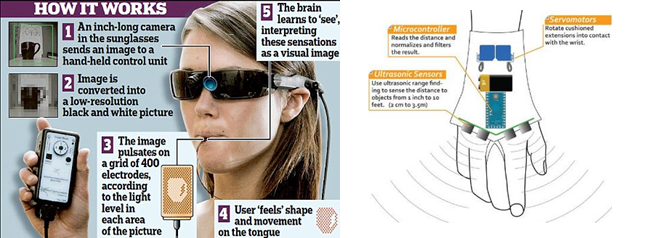

Application

It can be used as a wearable device like spectacles, or as a device worn on the hand.

(Image courtesy: Google)

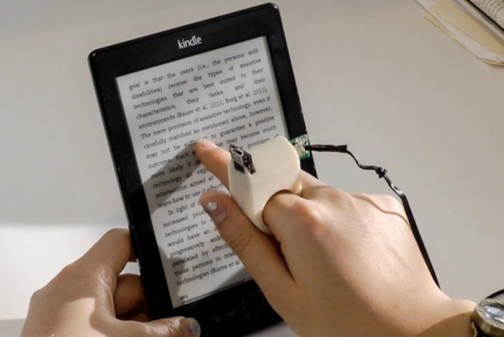

Future scope and Enhancement

a. Finger-mounted reading device for the blind:

Audio feedback helps the user scan a finger along a line of text, which software converts to speech.

http://news.mit.edu/2015/finger-mounted-reading-device-blind-0310

Study and Reference

a. GR-PEACH board study and Hello World code test

https://developer.mbed.org/platforms/Renesas-GR-PEACH/

https://developer.mbed.org/teams/Renesas/wiki/GR-PEACH-Getting-Started

b. Video and Audio shield interfacing

https://developer.mbed.org/teams/Renesas/wiki/Audio_Camera-shield

https://developer.mbed.org/teams/Renesas/code/GR-PEACH_WebCamera/

https://developer.mbed.org/teams/Renesas/code/GR-PEACH_Audio_WAV/

c. Image processing algorithms with MATLAB study

https://in.mathworks.com/help/vision/examples/abandoned-object-detection.html

http://www.kurzweilai.net/a-smart-object-recognition-algorithm-that-doesnt-need-humans

http://crcv.ucf.edu/projects/cviu2016/

d. Market product and History

http://www.medgadget.com/2011/08/build-your-own-arduino-sonar-for-the-visually-impaired.html

http://news.mit.edu/2015/finger-mounted-reading-device-blind-0310

Finalist in the GR-PEACH design contest in India, 2016